Imagetwin’s AI-generated image detection is now out of beta. After months of testing, development and invaluable feedback from our users, we are releasing a production-ready version.

Detecting AI-generated content in scientific papers is one of the most technically challenging areas of research integrity. With this release, we are delivering significantly higher accuracy, broader model coverage, and greater transparency for anyone reviewing flagged images.

Why This Matters

Since our beta launch, many of you have shared your experiences using this tool in real research workflows. One of the most consistent themes in your feedback was the need for more explainability: not just knowing whether an image was likely AI-generated, but understanding why it was flagged and what might have created it.

This need has become even clearer as evidence continues to show how difficult AI-generated content is to spot. A study published in Scientific Reports in November 2024 (Hartung et al., 2024) showed that even trained experts often fail to distinguish real histological images from AI-generated ones. The study, which involved over 800 participants, found that although experts performed slightly better than untrained participants, they still failed to reliably identify fabricated data. This highlights why scalable, automated detection is critical to maintaining trust in scientific publishing.

Your feedback directly shaped this release. Whether you are a researcher checking your own figures, an editor reviewing submissions, or an integrity officer conducting audits, we hope these improvements make it easier to interpret results and take informed next steps.

What's New

We built this update with two goals in mind: improving detection performance and making results more transparent and actionable.

Here are some of the most important changes:

Stronger Model Performance

We switched to a more recent state-of-the-art vision model and trained it on much larger and more diverse datasets. This includes thousands of AI-generated images created through text-to-image and image-to-image workflows.

Realistic Training Pipeline

Our team built a new pipeline that turns real images from published papers into modified AI versions designed to look realistic. This approach has significantly improved detection robustness across many scientific image types.

Expanded Model Coverage

Detection now recognises images generated by a wider range of tools, including:

Firefly (Adobe’s AI system used in Photoshop and other Adobe products)

DALL·E 3 (previously used in ChatGPT and widely adopted over the past few years)

Stable Diffusion (versions 1.6, 3.5, and XL)

Internet-generated images (AI art found through search engines or stock libraries)

Model Attribution for More Transparency

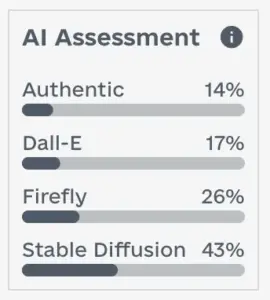

Previously, users only received a confidence score indicating the likelihood that an image was AI-generated. Now, each flagged image also includes an “AI assessment”: a distribution showing how likely the system considers each generator to have produced the image. For example, you may see a higher probability assigned to DALL·E than to Firefly or Stable Diffusion. This gives reviewers and editors more context to evaluate flagged cases.
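
To make the idea concrete, here is a minimal sketch in Python of how a reviewer might read such an assessment. The `AIAssessment` class, its field names, and the numbers are assumptions made up for illustration; they are not Imagetwin's actual data format, API, or real detection results.

```python
from dataclasses import dataclass


@dataclass
class AIAssessment:
    """Hypothetical container for one flagged image (not Imagetwin's real schema)."""

    confidence: float              # likelihood the image is AI-generated, 0..1
    attribution: dict[str, float]  # generator name -> attribution score

    def ranked_generators(self) -> list[tuple[str, float]]:
        """Return generators from most to least likely, normalised to sum to 1."""
        total = sum(self.attribution.values())
        if total <= 0:
            return []
        return sorted(
            ((name, score / total) for name, score in self.attribution.items()),
            key=lambda item: item[1],
            reverse=True,
        )


# Illustrative values only; these are not real detection results.
example = AIAssessment(
    confidence=0.9,
    attribution={"DALL·E 3": 0.6, "Firefly": 0.25, "Stable Diffusion": 0.15},
)

ranked = example.ranked_generators()
top_name, top_share = ranked[0]

print(f"AI-generated confidence: {example.confidence:.0%}")
for name, share in ranked:
    print(f"  {name}: {share:.0%}")
```

The point of the sketch is that the two numbers answer different questions: the confidence score says how likely the image is to be AI-generated at all, while the distribution ranks which generator is the most plausible source.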

Improved User Interface

Clicking any detected issue in the overview now opens a detail view that displays the confidence score and the likely distribution across AI models.

Now Fully Supported

With these improvements in place, we are confident this feature is ready to move out of beta. AI-generated image detection is now an established part of Imagetwin’s integrity toolkit, alongside Image Duplication, Plagiarism, and Manipulation detection.

We will continue to refine the system, expand our training datasets, and improve model attribution as new generative tools emerge.

If you have questions, ideas, or suggestions, please get in touch with our team! Your input has been critical to getting us here, and we look forward to supporting you as this technology evolves.

Try It Today

Log in to your Imagetwin account to start scanning papers with AI assessments included, or contact our team.

Thank you for helping us preserve image integrity in research.